But a wide range of traditions see human beings differently - from ancient Buddhist metaphysics to modern social psychology, to feminist philosophers of personhood. And that might seem like a good thing for algorithms to try and measure. It’s natural to feel like there’s an essence inside each one of us, that’s expressed through how we act. How do algorithms change our stories about who people are? I’m starting a new free weekly newsletter - TOTALLY HUMAN - to explore this from all kinds of angles. Voices in the Code: A Story about People, Their Values, and the Algorithm They Made If you're into Twitter, I did a thread here that unpacks a bit of the story: Also: I got some nifty bookplates made, and if you send me a heads up with your mailing address, I’ll be glad to mail you an inscription you can peel and stick on to the first page. You can pre-order it now on Amazon, and if you were to, I’d be most grateful. I’m not a medical person, but I came away really inspired by this corner where high technology and democracy seem to have made friends.Īnyway, the book is short and there’s some very real human drama, mixed in with the big ideas. That was a re-design of the kidney transplant system, the software that decides who gets each organ. The book looks at one such case, a time when people did manage to improve the moral logic of an algorithm. There are lots of ideas about how to change this, and make technology more democratic - public hearings, audits, transparency - but few real examples of those tools actually working.

Programmers or other experts end up making decisions that really belong to a wider community, choices about how we’re going to live. Those are moral choices, but when technology gets involved, things often become less democratic, less accountable. Preorders here: Software is shaping our lives in all kinds of ways - deciding who gets hired, which schools a child can attend, even who goes to jail.

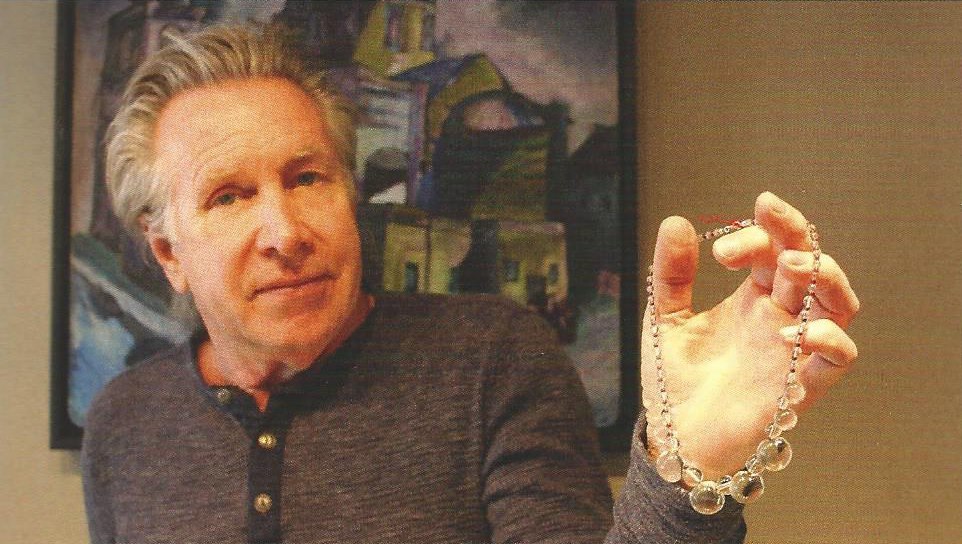

I'm thrilled to say my book, Voices in the Code, comes out September 8.
